Next: About this document ...

I started my career working in convex & discrete geometry, and computational geometry.

My work in the combinatorics and geometry of convex polytopes is well-known. In my dissertation, I solved an open question of well-known mathematicians Gelfand, Kapranov and Zelevinsky by finding an example of a non-regular triangulation of the cartesian product of two simplices and later worked very deeply on the problem of finding

optimal triangulations of polyhedra (e.g., I proved that the minimum triangulation of the dodecahedron has 23 tetrahedra).

My first book (joint with Rambau and Santos) Triangulations: Structures for Algorithms and Applications published by Springer in 2010, is a thorough reference about triangulations of polyhedra. I have also published many noteworthy contributions to the problems of computing volumes and integrals of polyhedra, and about counting lattice

points. The list of applications of these computational problems is very large, algebraic geometry and representation theory

(toric varieties, Clebsch-Gordan coefficients) to combinatorics, computer algebra, and statistics (E.g., in joint work with Liu and Yoshida we found the first ever closed-form combinatorial formula for the volumes and of the Birkhoff polytope ![]() , i.e., the convex hull of all

, i.e., the convex hull of all

![]() permutation matrices). I have also contributed to understanding Ehrhart polynomials and the well-known software LattE

was started under my direction and initiative, introducing dozens of undergraduates to research.

permutation matrices). I have also contributed to understanding Ehrhart polynomials and the well-known software LattE

was started under my direction and initiative, introducing dozens of undergraduates to research.

Although I continue to be interested in my earlier topics,

most of my work in the past seven years has been in the applied

area of integer and combinatorial optimization. This is the

part of applied mathematics related to optimizing functions over

discrete sets. A famous example of such problem is the

traveling salesman problem: Given ![]() cities that must be

visited only once, and the costs

cities that must be

visited only once, and the costs ![]() of flying from city

of flying from city ![]() to

city

to

city ![]() , the goal is to find the best order to visit all the given

cities in order to minimize the total cost of the trip.

, the goal is to find the best order to visit all the given

cities in order to minimize the total cost of the trip.

I have been one of the leaders of a new approach to the theory of optimization. In this new point of view, tools from algebra, topology and geometry, that were considered too pure and unrelated to applications, are used to prove unexpected computational or structural results. My new second book (joint with Hemmecke and Köppe) Algebraic and Geometric Ideas in the theory of Discrete Optimization is the first compilation of results from this novel approach collecting the work of several mathematicians. My own work has provided fundamental new developments taking place in the area of discrete optimization which I summarize here:

We extended the Lenstra's and Khachiyan's results, we answered the question in a paper appeared in Mathematics of Operations Research: We proved that while the problem is NP-hard, even for fixed dimension 2, there is a new approximation algorithm to maximize an arbitrary integral polynomial over the lattice points of a convex rational polytope with a fixed dimension. The algorithm produces a sequence of upper and lower bounds for the global optimal value. Surprisingly this is an excellent approximation scheme. The new method codifies the (exponentially many) lattice point solutions, as a short (polynomial-size) multivariate rational generating function (sum of quotients of polynomials). This result was nominated to various awards the year of 2006 when it was published, including the Fulkerson Prize. Later Hemmecke, Köppe and I showed that igives a similar result for multiobjective optimization.

Graver bases are in fact connected to commutative algebra and algebraic geometry, they are a universal

Gröbner bases for the toric ideal associated to the matrix ![]() .

During the past fifteen years, algebraic geometry and commutative

algebra tools have been shown exciting potential to study problems in

integer optimization. But, in the past, algebraic methods had always been

considered ``guilty'' of bad computational complexity, namely, the

notorious bad complexity for computing general Gröbner bases when

the number of variables grow. Our exciting new

research demonstrated that, by carefully using

the structure of matrix

.

During the past fifteen years, algebraic geometry and commutative

algebra tools have been shown exciting potential to study problems in

integer optimization. But, in the past, algebraic methods had always been

considered ``guilty'' of bad computational complexity, namely, the

notorious bad complexity for computing general Gröbner bases when

the number of variables grow. Our exciting new

research demonstrated that, by carefully using

the structure of matrix ![]() , Graver bases can compete (and win!!!)

against mainstream tools in optimization.

The paper appeared in the journal Discrete Optimization:

, Graver bases can compete (and win!!!)

against mainstream tools in optimization.

The paper appeared in the journal Discrete Optimization:

Fix any pair of integer matrices ![]() and

and ![]() with the same number of

columns, of dimensions

with the same number of

columns, of dimensions ![]() and

and ![]() , respectively. The

n-fold matrix of the ordered pair

, respectively. The

n-fold matrix of the ordered pair ![]() is the following

is the following

![]() matrix,

matrix,

![\begin{displaymath}[A,B]^{(n)} \quad:=\quad ({\bf 1}_n\otimes B)\oplus (I_n\otim...

...dots \\

0 & 0 & 0 & \cdots & A \\

\end{array}\right)\quad .

\end{displaymath}](img29.png)

The number of variables grow, but we can prove

Theorem Fix any integer matrices ![]() of sizes

of sizes

![]() and

and ![]() , respectively. Then there is a polynomial

time algorithm that, given any

, respectively. Then there is a polynomial

time algorithm that, given any ![]() and any integer vectors

and any integer vectors ![]() and

and

![]() , solves the corresponding n-fold integer programming problem.

, solves the corresponding n-fold integer programming problem.

The key ingredient to make it work comes from commutative algebra.

What happens is that for every pair of integer matrices

![]() and

and

![]() , there exists a

constant

, there exists a

constant ![]() such that for all

such that for all ![]() , the Graver basis of

, the Graver basis of

![]() consists of vectors with at most

consists of vectors with at most ![]() the number

nonzero components. This means that it suffices to compute the Graver

bases of a small

the number

nonzero components. This means that it suffices to compute the Graver

bases of a small ![]() -fold matrix to be able to obtain others.

More recently we were also able to extend the above theorem to the

optimization of maximization convex functions instead of just optimizing linear

functions.

-fold matrix to be able to obtain others.

More recently we were also able to extend the above theorem to the

optimization of maximization convex functions instead of just optimizing linear

functions.

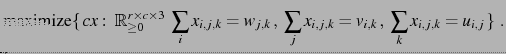

Back in 1986 several open problems were proposed by Vlach and co-authors. 20 year later, in our paper published in the SIAM Journal of Optimization, S. Onn and I solved most of them as easy corollaries of the following theorem

Theorem: (De Loera-Onn) Any integer programming problem

![]() is in fact polynomial-time representable as a

is in fact polynomial-time representable as a ![]() -way transportation

problem with

-way transportation

problem with ![]() -marginals

-marginals ![]() depending polynomially on the

binary size of the input

depending polynomially on the

binary size of the input ![]() :

:

By saying that an integer program is representable as another we mean that the solution spaces are identical and one can transfer one into the other efficiently. Thus, one can interpret our theorem as a ``normal form'' or ``canonical reformulation'' algorithm. So, any integer or linear programming problem can be put in the special form of a multiway transportation problems for arrays of 3 indices with line sums prescribed.

``These two papers introduce a pioneering new approach for proving the optimality of solutions to combinatorial optimization problems. The approach begins by encoding the instance and an objective bound as a system of polynomial equations over a finite field. Then, using Hilbert's Nullstellensatz, the authors demonstrate the effectiveness of a computational method to certify the infeasibility of the polynomial system. The encoding is derived and analyzed for a number of problem classes, such as the k-coloring, stable set, longest cycle, largest planar subgraph, and minimum edge coloring problems. While the degree of the certifying polynomial could be exponentially large, the authors demonstrate that in many cases of interest the degree is low enough to allow for explicit, fast computations. The authors also develop a variety of computational enhancements, including computing over finite fields, exploiting symmetry, adding redundant equations, and applying alternative Nullstellensätze that allow them to solve 3-coloring problems significantly faster than existing methods.

In this impressive work, the authors take up a mathematical machinery that seemed very unlikely to be useful in practice and turn it into a useful computational algorithm. This work is likely to stimulate additional research into computational polynomial methods, perhaps placing them on the same footing as polyhedral techniques for solving combinatorial optimization problems.''

Most recently, I have been an active contributor in the study of the complexity of linear optimization algorithms, most notably, the simplex method and interior point methods. The central path is the key object in interior point methods algorithms and software. In joint work with Vinzant and Sturmfels, we solved that 20-year-old problem, by giving explicit exact algebraic formulas for the central path, determined the degree, and genus of the curve. These invariants, along with the degree of the Gauss image of the curve, are expressed in terms of a matroid of the input data. As an application we give an instance-specific bound of the total curvature of the central path, a quantity relevant for the performance of interior point methods. The key mathematical ``liaison'' we managed to established is that the differential geometry of a central curve is intimately connected to that of the underlying matroid of the constraints.

Another significant contribution regards the geometry of the simplex method. It is of course a long-standing open question to bound the diameter of the graphs of convex polyhedra. It is well-known in linear optimization that bounding the diameter is important for the performance of the simplex, which searches the graph of the polyhedron. Fields medalist Steven Smale has listed the problem of finding a strongly polynomial algorithm for linear programming, possibly via a polynomial pivot rule for the simplex method, as one of the key mathematical problems in the 21st century. Billera and Provan had proposed in 1979 a conjecture that if true would have proved a linear bound to the diameter of all polyhedra, namely twice the number of facet defining inequalities. The idea relies on a clever decomposition of simplicial complexes by a sequence of removals of lower dimensional faces. In a very recent paper published in Mathematics of Operation Research in 2012 (joint work with former postdoc Steve Klee), we solve their open question about their method to bound the diameter of convex polyhedra on the negative. We disproved the conjecture showing that the conjecture fails in a 4-dimensional example coming from transportation optimization problems. The construction is very elegant and uses topological tools (e.g., simplicial complexes deletion and contraction of edges).